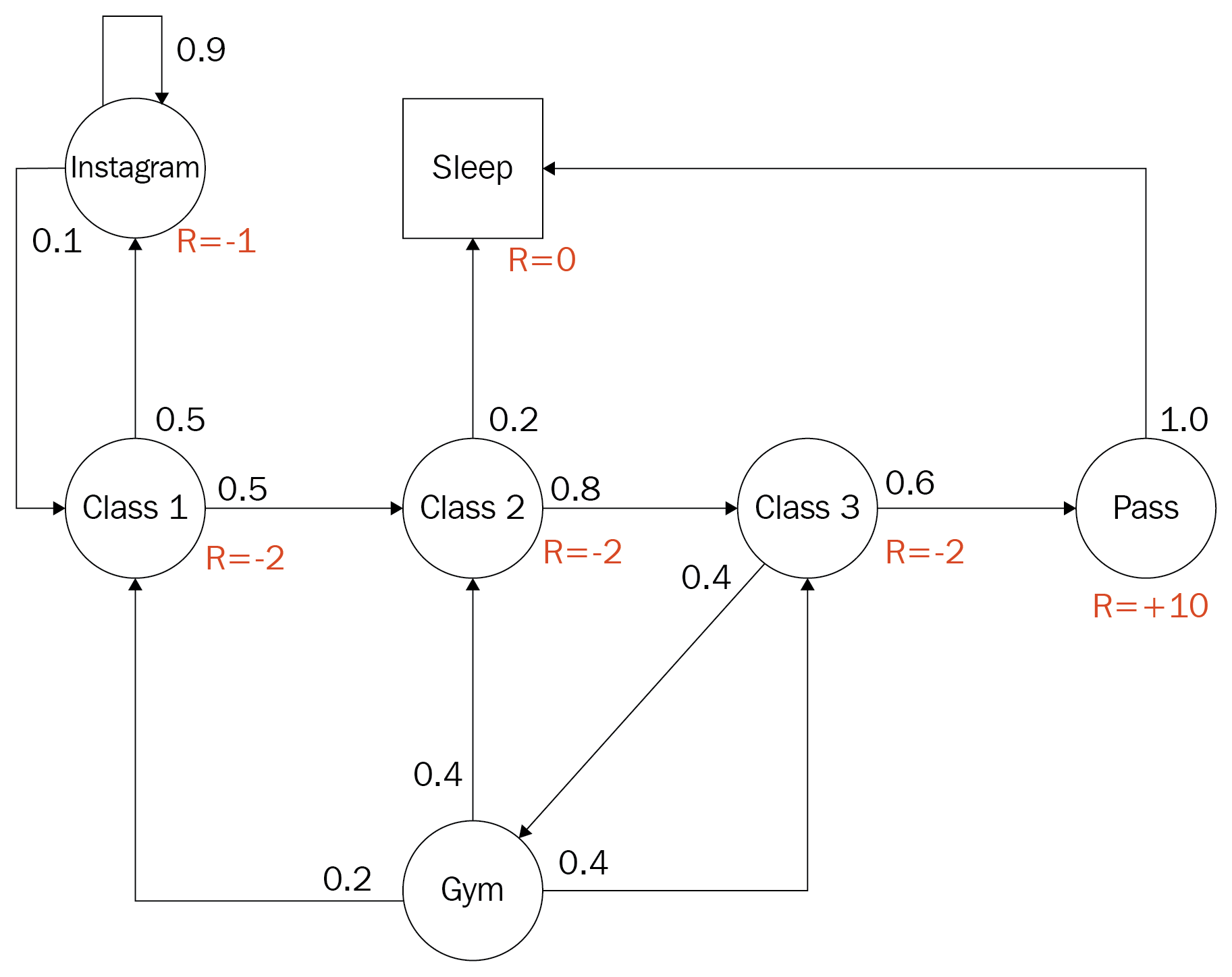

In RL, the agent learns from the environment by interpreting the state signal. Each state signal must describe a discrete slice of the environment at a single point in time. For example, if our agent were controlling a rocket, each state signal would capture the rocket at one moment; state in that case might be defined by the rocket's position and velocity. We refer to this state signal from the environment as a Markov state. A Markov state alone is not enough to make decisions from: the agent also needs to understand previous states, possible actions, and future rewards. Together, these additional considerations tie into what is called the Markov property, which we will discuss further in the next section.
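To make the rocket example concrete, here is a minimal sketch. It assumes a hypothetical one-dimensional rocket with constant gravity and a fixed one-second time step. `RocketState`, `step`, `GRAVITY`, and `DT` are illustrative names, not part of any library. Each call to `step` produces a new state signal that captures the rocket's position and velocity at the next discrete moment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RocketState:
    """Hypothetical state signal: a snapshot of the rocket at one time step."""
    position: float  # altitude in metres (assumed units)
    velocity: float  # vertical velocity in m/s (assumed units)


GRAVITY = -9.81  # m/s^2, assumed constant downward acceleration
DT = 1.0  # seconds per time step, an assumed discretization


def step(state: RocketState, thrust: float) -> RocketState:
    """Advance the hypothetical environment by one discrete time step.

    `thrust` is the agent's action, an upward acceleration in m/s^2.
    Uses explicit (forward) Euler integration: the new position is
    computed from the velocity at the start of the step.
    """
    acceleration = GRAVITY + thrust
    new_velocity = state.velocity + acceleration * DT
    new_position = state.position + state.velocity * DT
    return RocketState(position=new_position, velocity=new_velocity)


if __name__ == "__main__":
    # Roll the environment forward a few steps with constant thrust,
    # collecting one state signal per time step.
    trajectory = [RocketState(position=0.0, velocity=0.0)]
    for _ in range(3):
        trajectory.append(step(trajectory[-1], thrust=20.0))
    for t, s in enumerate(trajectory):
        print(f"t={t}: position={s.position:.2f} m, velocity={s.velocity:.2f} m/s")
```

Calling `step` repeatedly yields a sequence of snapshots, one per time step, which is the stream of state signals the agent would observe. Freezing the dataclass keeps each snapshot immutable, so earlier states stay intact if the agent needs to look back at them.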

Hands-On Reinforcement Learning for Games

By :

Hands-On Reinforcement Learning for Games

By:

Overview of this book

Free Chapter

Free Chapter