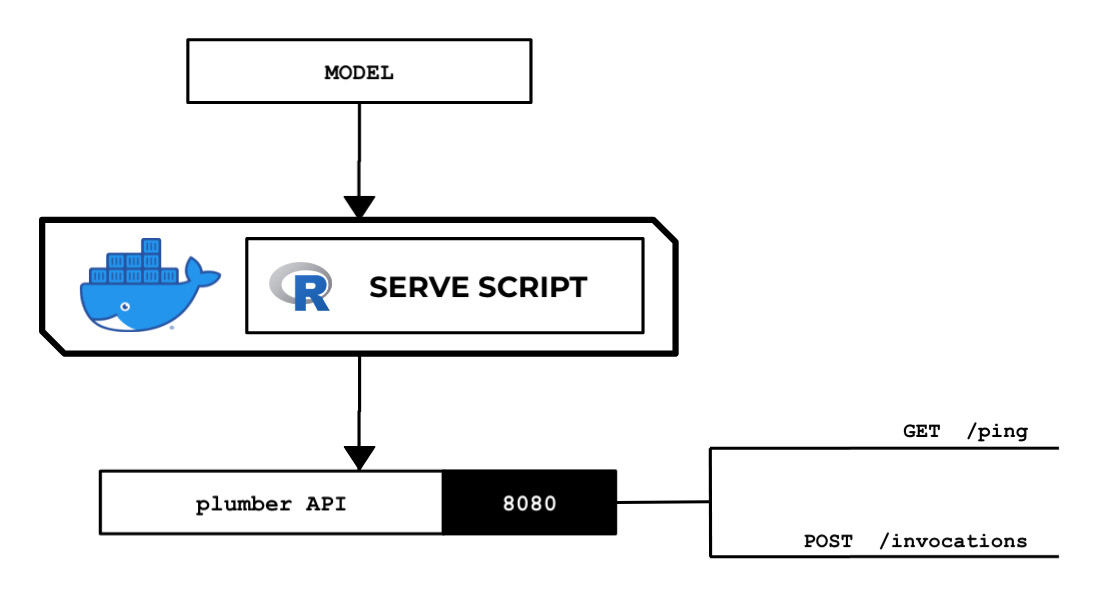

Figure 2.84 – The R serve script loads and deserializes the model and runs a plumber API server that acts as the inference endpoint

- Double-click the api.r file inside the ml-r directory in the file tree:

Figure 2.85 – An empty api.r file inside the ml-r directory

Here, we can see four files under the ml-r directory. Remember that we created an empty api.r file in the Setting up the Python and R experimentation environments recipe:

Figure 2.86 – Empty api.r file

In the next couple of steps, we will add a few lines of code inside this api.r file. Later, we will learn how to use the plumber package to generate an API from this api.r file.

- Define the prepare_paths() function, which we will use to initialize the PATHS variable. PATHS helps us manage the paths of the primary files and directories used in the script. It is a named list (a dictionary-like data structure) that maps short keys to paths relative to /opt/ml/, which we can use to get the absolute paths of the required files:

```r
# Build a named list that maps short keys to paths relative to /opt/ml/
prepare_paths <- function() {
  keys <- c('hyperparameters',
            'input',
            'data',
            'model')
  values <- c('input/config/hyperparameters.json',
              'input/config/inputdataconfig.json',
              'input/data/',
              'model/')
  paths <- as.list(values)
  names(paths) <- keys
  return(paths)
}

PATHS <- prepare_paths()
```

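- Next, define the get_path() function, which uses the PATHS variable from the previous step to build the absolute path for a given key:

```r
# Prepend the /opt/ml/ root to the relative path stored under `key`
get_path <- function(key) {
  output <- paste('/opt/ml/', PATHS[[key]], sep = "")
  return(output)
}
```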
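To check which absolute paths these two helpers produce, here is a minimal shell sketch. It mirrors the PATHS mapping and the string concatenation done by get_path(). The function name get_path_sh and the variable ML_ROOT are hypothetical and exist only for illustration. The script only prints strings and does not read any files.

```shell
#!/bin/sh
# Hypothetical shell mirror of prepare_paths() + get_path() in api.r.
# It only builds strings; nothing is read from disk.

ML_ROOT="/opt/ml/"

get_path_sh() {
  case "$1" in
    hyperparameters) rel="input/config/hyperparameters.json" ;;
    input)           rel="input/config/inputdataconfig.json" ;;
    data)            rel="input/data/" ;;
    model)           rel="model/" ;;
    *) echo "unknown key: $1" >&2; return 1 ;;
  esac
  # Same concatenation as paste('/opt/ml/', PATHS[[key]], sep = "") in R
  printf '%s%s\n' "$ML_ROOT" "$rel"
}

# load_model() appends 'model' to the model directory path
model_file="$(get_path_sh model)model"
echo "$model_file"   # /opt/ml/model/model
```

For example, get_path_sh hyperparameters prints /opt/ml/input/config/hyperparameters.json. That is the same absolute path the R code resolves for that key.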

- Create the following function, including the #* comment line, which plumber reads as an annotation. The function responds with "OK" when triggered from the /ping endpoint:

```r
#* @get /ping
function(res) {
  res$body <- "OK"
  return(res)
}
```

The line containing #* @get /ping tells plumber to use this function to handle GET requests to the /ping route.

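- Define the load_model() function, which reads the serialized model file (named model) from the model directory:

```r
load_model <- function() {
  model <- NULL
  # Resolves to /opt/ml/model/model
  filename <- paste0(get_path('model'), 'model')
  print(filename)
  # Deserialize the model object stored in the RDS file
  model <- readRDS(filename)
  return(model)
}
```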

- Define the following /invocations function, which loads the model and uses it to perform a prediction on the input value from the request body:

```r
#* @post /invocations
function(req, res) {
  print(req$postBody)
  model <- load_model()

  # Convert the raw request body (for example, "1") into a number
  payload_value <- as.double(req$postBody)

  # Build a one-row data frame; name the column "X" so that
  # predict() can match it to the model's input variable
  X_test <- data.frame(payload_value)
  colnames(X_test) <- "X"

  print(summary(model))
  y_test <- predict(model, X_test)
  output <- y_test[[1]]
  print(output)

  # Return the prediction as plain text in the response body
  res$body <- toString(output)
  return(res)
}
```

Here, we load the model with the load_model() function and convert the input payload into a one-row data frame. We then pass it to the predict() function to perform the actual prediction for the given X value and return the predicted value in the response body.

Tip

You can access a working copy of the api.r file in the Machine Learning with Amazon SageMaker Cookbook GitHub repository: https://github.com/PacktPublishing/Machine-Learning-with-Amazon-SageMaker-Cookbook/blob/master/Chapter02/ml-r/api.r.
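The /invocations handler passes the raw request body to as.double(). A body that is not a plain number (for example, abc) therefore becomes NA, and R emits a coercion warning, instead of producing a usable input value. The following shell sketch shows one possible client-side guard that checks a payload before it is sent. The is_numeric_payload function and its regular expression are assumptions for illustration, not part of the recipe. They accept only simple decimal and exponent notation.

```shell
#!/bin/sh
# Hypothetical client-side check: does the payload look like a plain
# number that as.double() in the /invocations handler can parse?
# Accepts an optional sign, digits with an optional decimal part,
# and an optional exponent (for example: 1, 2.5, -3e2, .5).
is_numeric_payload() {
  printf '%s\n' "$1" |
    grep -Eq '^[+-]?([0-9]+([.][0-9]*)?|[.][0-9]+)([eE][+-]?[0-9]+)?$'
}

for value in "1" "2.5" "-3e2" "abc" ""; do
  if is_numeric_payload "$value"; then
    echo "send: '$value'"
  else
    echo "skip: '$value'"
  fi
done
```

For example, with a hypothetical PAYLOAD variable, is_numeric_payload "$PAYLOAD" && curl -d "$PAYLOAD" -X POST http://$SERVE_IP:8080/invocations sends the request only when the check passes.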

Now that the api.r file is ready, let's prepare the serve script:

- Double-click the serve file inside the ml-r directory in the file tree:

Figure 2.87 – The serve file inside the ml-r directory

It should open an empty serve file, similar to what is shown in the following screenshot:

Figure 2.88 – The serve file inside the ml-r directory

We will add the necessary code to this empty serve file in the next set of steps.

- Start the serve script with the following lines of code, which load the plumber and here packages:

```r
#!/usr/bin/Rscript

suppressWarnings(library(plumber))
library('here')
```

The here package provides utility functions to help us easily build paths to files (for example, api.r).

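- Add the following lines of code to start the plumber API server:

```r
# Build the absolute path to api.r and generate the API from its annotations
path <- paste0(here(), "/api.r")
pr <- plumb(path)

# Listen on all network interfaces on port 8080
pr$run(host="0.0.0.0", port=8080)
```

Here, we used the plumb() function to create a router from api.r and the run() method to launch the web server. Note that the web server must listen on port 8080, because SageMaker sends the /ping and /invocations requests to the container on this port.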

Tip

You can access a working copy of the serve script in the Machine Learning with Amazon SageMaker Cookbook GitHub repository: https://github.com/PacktPublishing/Machine-Learning-with-Amazon-SageMaker-Cookbook/blob/master/Chapter02/ml-r/serve.
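When the serve script starts, plumber may need a moment before it accepts connections on port 8080. The following POSIX shell sketch polls the /ping route until it answers OK or a retry budget runs out, which can be handy before sending test requests. The wait_for_ping function name, its arguments, and the default retry and delay values are hypothetical choices. The function relies only on the standard curl options -s, -f, and --max-time.

```shell
#!/bin/sh
# Hypothetical helper: poll BASE_URL/ping until the response contains "OK".
# Usage: wait_for_ping BASE_URL [RETRIES] [DELAY_SECONDS]
# Returns 0 as soon as the server answers, 1 if all attempts fail.
wait_for_ping() {
  base_url="$1"
  retries="${2:-10}"
  delay="${3:-1}"
  attempt=0
  while [ "$attempt" -lt "$retries" ]; do
    # -s: no progress output, -f: treat HTTP errors as failures,
    # --max-time: give up on a single request after 2 seconds
    response=$(curl -sf --max-time 2 "$base_url/ping" 2>/dev/null)
    case "$response" in
      *OK*) return 0 ;;
    esac
    attempt=$((attempt + 1))
    sleep "$delay"
  done
  return 1
}
```

With the serve script running, wait_for_ping "http://localhost:8080" && echo "server is up" prints server is up once the /ping route responds. The sketch uses plain (non-local) variables to stay POSIX-compliant, so they remain set in the calling shell after the function returns.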

- Open a new Terminal tab:

Figure 2.89 – Locating the Terminal

Here, we see that a Terminal tab is already open. If you need to create a new one, simply click the plus (+) sign and then click New Terminal.

- Install libcurl4-openssl-dev and libsodium-dev using apt-get install. These are some of the prerequisites for installing the plumber package:

```shell
sudo apt-get install -y --no-install-recommends libcurl4-openssl-dev
sudo apt-get install -y --no-install-recommends libsodium-dev
```

- Install the here package:

```shell
sudo R -e "install.packages('here',repos='https://cloud.r-project.org')"
```

The here package helps us get the string path values we need to locate specific files (for example, api.r). Feel free to check out https://cran.r-project.org/web/packages/here/index.html for more information.

- Install the plumber package:

```shell
sudo R -e "install.packages('plumber',repos='https://cloud.r-project.org')"
```

The plumber package allows us to generate an HTTP API in R. For more information, feel free to check out https://cran.r-project.org/web/packages/plumber/index.html.

- Navigate to the ml-r directory:

```shell
cd /home/ubuntu/environment/opt/ml-r
```

- Make the serve script executable using chmod:

```shell
chmod +x serve
```

- Run the serve script:

```shell
./serve
```

This should yield log messages similar to the following ones:

Figure 2.90 – The serve script running

Here, we can see that our serve script has successfully run a plumber API web server on port 8080.

Finally, let's send test requests to this running web server.

- Open a new Terminal tab:

Figure 2.91 – New Terminal

Here, we are creating a new Terminal tab as the first tab is already running the serve script.

- Set the value of the SERVE_IP variable to localhost:

```shell
SERVE_IP=localhost
```

- Check whether the ping endpoint is available with curl:

```shell
curl http://$SERVE_IP:8080/ping
```

Running the previous line of code should yield an OK from the /ping endpoint.

- Test the invocations endpoint with curl:

```shell
curl -d "1" -X POST http://$SERVE_IP:8080/invocations
```

We should get a value close to 881.342840085751.
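The recipe only promises a value close to 881.342840085751, so an exact string comparison may be too strict for an automated check. The following sketch uses awk to compare two numbers within a tolerance. The is_close function name and the default tolerance of 1e-6 are assumptions for illustration.

```shell
#!/bin/sh
# Hypothetical helper: succeed (exit status 0) when |ACTUAL - EXPECTED| <= TOLERANCE.
# Usage: is_close ACTUAL EXPECTED [TOLERANCE]
is_close() {
  awk -v a="$1" -v b="$2" -v tol="${3:-1e-6}" 'BEGIN {
    # "+ 0" forces numeric interpretation of the command-line values
    d = (a + 0) - (b + 0)
    if (d < 0) d = -d
    if (d <= tol + 0) exit 0
    exit 1
  }'
}

# Example use against the running server (SERVE_IP from the previous steps):
#   ACTUAL=$(curl -s -d "1" -X POST "http://$SERVE_IP:8080/invocations")
#   is_close "$ACTUAL" 881.342840085751 && echo "prediction looks right"
```

An empty response, such as the one curl returns when the server is down, is treated as 0 by awk, so the check fails as expected.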

Compared to its Python recipe counterpart, this recipe uses two files (api.r and serve) instead of one, because of how we used plumber to define and launch the API.
