- Open a Jupyter notebook and add a new cell to import the pandas library and load the dataset into a pandas dataframe. To do so, you first need to import the library, and then use the pd.read_csv() function, as shown here:

import pandas as pd

Link = 'https://github.com/TrainingByPackt/Data-Science-with-Python/blob/master/Chapter01/Data/Banking_Marketing.csv'

#reading the data into the dataframe into the object data

df = pd.read_csv(Link, header=0)

- To find the number of rows and columns in the dataset, add the following code:

#Finding number of rows and columns

print("Number of rows and columns : ",df.shape)

The preceding code generates the following output:

Figure 1.60: Number of rows and columns in the dataset

- To print the list of all columns, add the following code:

#Printing all the columns

print(list(df.columns))

The preceding code generates the following output:

Figure 1.61: List of columns present in the dataset

- To overview the basic statistics of each column, such as the count, mean, median, standard deviation, minimum value, maximum value, and so on, add the following code:

#Basic Statistics of each column

df.describe().transpose()

The preceding code generates the following output:

Figure 1.62: Basic statistics of each column

- To print the basic information of each column, add the following code:

#Basic Information of each column

print(df.info())

The preceding code generates the following output:

Figure 1.63: Basic information of each column

In the preceding figure, you can see that none of the columns contains any null values. Also, the type of each column is provided.

- Now let's check for missing values and the type of each feature. Add the following code to do this:

#finding the data types of each column and checking for null

null_ = df.isna().any()

dtypes = df.dtypes

sum_na_ = df.isna().sum()

info = pd.concat([null_,sum_na_,dtypes],axis = 1,keys = ['isNullExist','NullSum','type'])

info

Have a look at the output for this in the following figure:

Figure 1.64: Information of each column stating the number of null values and the data types

- Since we have loaded the dataset into the data object, we will remove the null values from the dataset. To remove the null values from the dataset, add the following code:

#removing Null values

df = df.dropna()

#Total number of null in each column

print(df.isna().sum())# No NA

Have a look at the output for this in the following figure:

Figure 1.65: Features of dataset with no null values

- Now we check the frequency distribution of the education column in the dataset. Use the value_counts() function to implement this:

df.education.value_counts()

Have a look at the output for this in the following figure:

Figure 1.66: Frequency distribution of the education column

- In the preceding figure, we can see that the education column of the dataset has many categories. We need to reduce the categories for better modeling. To check the various categories in the education column, we use the unique() function. Type the following code to implement this:

df.education.unique()

The output is as follows:

Figure 1.67: Various categories of the education column

- Now let's group the basic.4y, basic.9y, and basic.6y categories together and call them basic. To do this, we can use the replace function from pandas:

df.education.replace({"basic.9y":"Basic","basic.6y":"Basic","basic.4y":"Basic"},inplace=True)

- To check the list of categories after grouping, add the following code:

df.education.unique()

Figure 1.68: Various categories of the education column

In the preceding figure, you can see that basic.9y, basic.6y, and basic.4y are grouped together as Basic.

- Now we select and perform a suitable encoding method for the data. Add the following code to implement this:

#Select all the non numeric data using select_dtypes function

data_column_category = df.select_dtypes(exclude=[np.number]).columns

The preceding code generates the following output:

Figure 1.69: Various columns of the dataset

- Now we define a list with all the names of the categorical features in the data. Also, we loop through every variable in the list, getting dummy variable encoded output. Add the following code to do this:

cat_vars=data_column_category

for var in cat_vars:

cat_list='var'+'_'+var

cat_list = pd.get_dummies(df[var], prefix=var)

data1=df.join(cat_list)

df=data1

df.columns

The preceding code generates the following output:

Figure 1.70: List of categorical features in the data

- Now we neglect the categorical column for which we have done encoding. We'll select only the numerical and encoded categorical columns. Add the code to do this:

#Categorical features

cat_vars=data_column_category

#All features

data_vars=df.columns.values.tolist()

#neglecting the categorical column for which we have done encoding

to_keep = []

for i in data_vars:

if i not in cat_vars:

to_keep.append(i)

#selecting only the numerical and encoded catergorical column

data_final=df[to_keep]

data_final.columns

The preceding code generates the following output:

Figure 1.71: List of numerical and encoded categorical columns

- Finally, we split the data into train and test sets. Add the following code to implement this:

#Segregating Independent and Target variable

X=data_final.drop(columns='y')

y=data_final['y']

from sklearn. model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

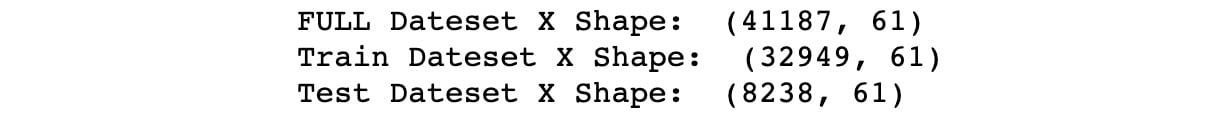

print("FULL Dateset X Shape: ", X.shape )

print("Train Dateset X Shape: ", X_train.shape )

print("Test Dateset X Shape: ", X_test.shape )

The output is as follows:

Free Chapter

Free Chapter