Cloud computing adoption has risen steeply over the last decade. Nevertheless, a significant share of businesses still keep their servers and data on-premises. There are several reasons why a business may prefer on-premises over the cloud. Some businesses perceive their data as more secure when it is kept on their own servers. Others, generally smaller businesses, may not feel the need to optimize their IT landscape or are simply not keen on change. Organizations in strictly regulated industries can be bound to on-premises for compliance reasons. Whatever the reason, on-premises architectures nowadays come with certain challenges.

These challenges include, among other things, the following:

- Scalability

- Cost optimization

- Agility

- Flexibility

Let’s go through these challenges in detail.

Scalability

Organizations with a rapidly expanding technological landscape struggle the most with scalability. As the total volume of business data keeps growing, an organization constantly has to find new ways to expand its on-premises server farm. This is not always as simple as adding extra servers. After a while, additional building infrastructure is needed, new personnel must be hired, energy consumption soars, and so on.

Here, the benefit of cloud computing is the enormous pool of available servers and computing resources. For the business, this means it can provision additional capacity on demand without having to worry about the intricate organization and planning of its own servers.

Cost optimization

Businesses that rely completely on on-premises servers are almost never fully cost-effective. Why is this so?

Let’s take a look at two scenarios:

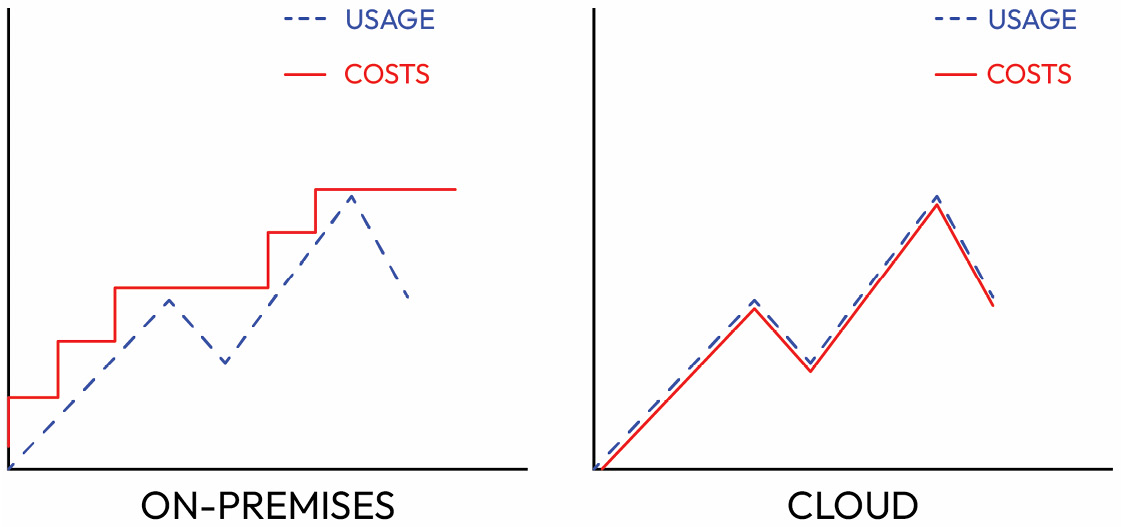

- When usage increases: As usage increases, the need for extra capacity arises. A business is not going to wait until its servers are used to their limits, risking heavy throttling and bottlenecks, before it starts expanding capacity. This avoids fully saturating the servers, but it also means that the computing and storage capacity is never fully used. While usage can grow linearly or exponentially, costs rise in discrete increments, each corresponding to a distinct expansion of server capacity.

- When usage decreases: When usage decreases, the additional capacity simply sits unused. Even if the decrease lasts for a long time, it is not easy to sell the hardware, free up the physical space, and let go of the extra maintenance personnel. In most situations, costs therefore remain unchanged even though usage has dropped.

Cloud computing usually follows a pay-as-you-go (PAYG) pricing model, which addresses both of these scenarios. With PAYG, costs track actual usage, so a business pays neither for idle headroom nor for hardware it no longer needs, as Figure 1.4 illustrates:

Figure 1.4 – Cost patterns depending on usage for on-premises and cloud infrastructure
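The two cost patterns can also be sketched numerically. The following is a minimal illustration, not a pricing model: the usage series, server size, headroom percentage, and prices are all hypothetical values chosen for the example, and the function names are our own. It assumes that on-premises capacity is bought in whole servers with some safety headroom and is never sold off again, while PAYG costs scale directly with usage.

```python
import math


def servers_needed(usage, server_size, headroom_pct=20):
    """Whole servers required to hold `usage` plus a safety headroom.

    All parameters are hypothetical illustration values.
    """
    return math.ceil(usage * (100 + headroom_pct) / (100 * server_size))


def on_prem_costs(usage_series, server_size, cost_per_server, headroom_pct=20):
    """Cost per period when capacity only grows in whole-server steps."""
    costs = []
    servers = 0
    for usage in usage_series:
        # Assumption: hardware is kept when usage drops, so the
        # server count never decreases.
        servers = max(servers, servers_needed(usage, server_size, headroom_pct))
        costs.append(servers * cost_per_server)
    return costs


def payg_costs(usage_series, cost_per_unit):
    """Cost per period when every unit of usage is billed as consumed."""
    return [usage * cost_per_unit for usage in usage_series]


# Hypothetical usage that first rises and then falls again.
usage = [40, 70, 110, 160, 120, 60]

on_prem = on_prem_costs(usage, server_size=100, cost_per_server=1000)
cloud = payg_costs(usage, cost_per_unit=10)

for period, (u, op, cl) in enumerate(zip(usage, on_prem, cloud), start=1):
    print(f"period {period}: usage={u:>3}  on-prem={op:>4}  PAYG={cl:>4}")
```

In this sketch, the on-premises cost jumps once when a second server is needed and then stays at that level even after usage falls, whereas the PAYG cost rises and falls together with usage.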

Let’s cover the next challenge now.

Agility

Agility is not about whether a certain change is possible, but about how quickly a business can implement it. Expanding or reducing capacity, changing the type of processing power, and so on all take time in an on-premises environment. In most cases, this involves acquiring new hardware, installing it, and configuring security, all of which can be extremely time-consuming in a business context.

Here, cloud architectures are far more agile than on-premises architectures. Scaling capacity up or down, or swapping memory-optimized machines for compute-optimized ones, can typically be done in a matter of seconds or minutes.

Flexibility

The challenge of flexibility can be interpreted very broadly and has some intersections with the other challenges. Difficulties with scalability and agility can be defined as types of flexibility issues.

Apart from difficulties regarding scalability and agility, on-premises servers face the issue of constant hardware modernization. Here, we can compare on-premises and cloud infrastructure to a purchased car and a rental car, respectively. Cutting-edge technology is not always needed, but when it is, consider which option will give you the more modern car in most situations.

In other cases, specialized hardware such as field-programmable gate arrays (FPGAs) might be required for a short period of time, for example, during the training of an extraordinarily complex ML model. To revisit the car example, if you only occasionally have to move furniture, would you rather purchase a van or rent one for the day of the move?
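The rent-or-buy reasoning comes down to a simple break-even calculation. The sketch below is only an illustration under strong simplifying assumptions: the purchase price and hourly rate are made-up numbers, and maintenance, power, staffing, and resale value are ignored.

```python
def break_even_hours(purchase_cost, hourly_rental_rate):
    """Hours of use at which renting has cost as much as buying.

    Simplification: ignores maintenance, power, staffing, and resale value.
    """
    return purchase_cost / hourly_rental_rate


def cheaper_option(purchase_cost, hourly_rental_rate, expected_hours):
    """Return "rent" if renting for the expected hours costs less than buying."""
    rental_total = hourly_rental_rate * expected_hours
    return "rent" if rental_total < purchase_cost else "buy"


# Hypothetical figures for a piece of specialized hardware.
purchase_cost = 8000       # one-off purchase price
hourly_rental_rate = 2     # price per hour when rented from a cloud provider

print(break_even_hours(purchase_cost, hourly_rental_rate))
print(cheaper_option(purchase_cost, hourly_rental_rate, expected_hours=200))
print(cheaper_option(purchase_cost, hourly_rental_rate, expected_hours=5000))
```

With these made-up figures, a short training job of a few hundred hours stays far below the break-even point, so renting is the cheaper choice. Only sustained use over thousands of hours would justify the purchase.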

Let’s summarize the chapter next.
