Data generation is growing at an exponential rate. By one widely cited estimate, 90 percent of the world's data was generated in the last 2 years, and global data creation is expected to reach 181 zettabytes by 2025.

Just to put this number in perspective, 1 zettabyte is equal to 1 million petabytes. This scale requires data architects to deal with the complexity of big data, but it also introduces an opportunity. The expert data analyst, Doug Laney, defines big data with the popular three Vs framework: Volume, Variety, and Velocity. In this section, we explore a fourth V: Value.
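
To make the scale concrete, the conversion can be checked with a few lines of Python. This is a minimal sketch that assumes decimal (SI) units; the constant and variable names are illustrative only.

```python
# Decimal (SI) byte units, expressed as powers of ten.
PETABYTE = 10**15
ZETTABYTE = 10**21

# One zettabyte expressed in petabytes.
petabytes_per_zettabyte = ZETTABYTE // PETABYTE
print(petabytes_per_zettabyte)  # 1000000

# The forecast of 181 zettabytes, expressed in petabytes.
forecast_in_petabytes = 181 * petabytes_per_zettabyte
print(f"{forecast_in_petabytes:,}")  # 181,000,000
```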

Types of analytics

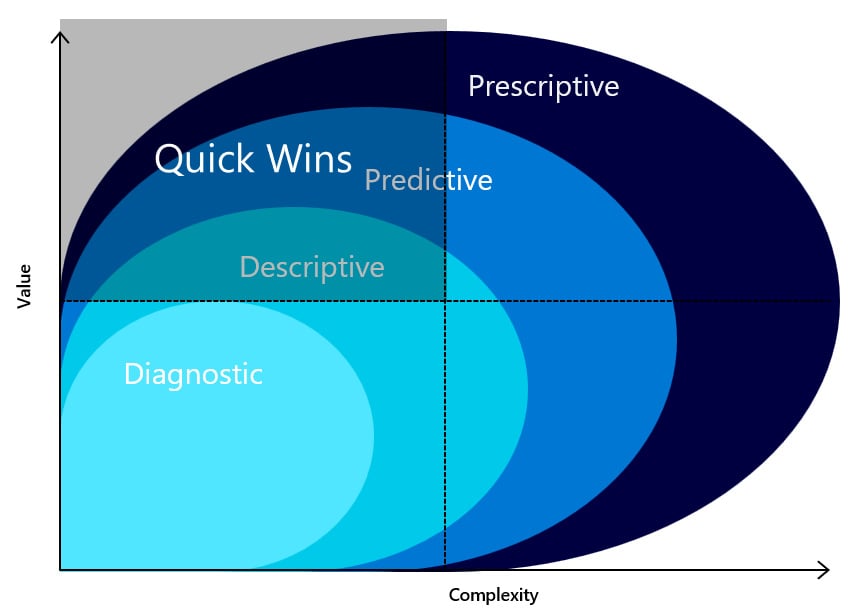

Data empowers businesses to look back into the past, gain insights into established and emerging patterns, and make informed decisions for the future. Gartner splits analytical solutions that support decision-making into four categories: descriptive, diagnostic, predictive, and prescriptive analytics. Each successive category is potentially more complex than the previous one but can also add more value to your business.

Let’s go through each of these categories next:

- Descriptive analytics is concerned with answering the question, “What is happening in my business?” It describes the past and current state of the business by creating static reports on top of data. The data used to answer this question is often stored in a data warehouse, which models historical data in dimension and fact tables for reporting purposes.

- Diagnostic analytics tries to answer the question, “Why is it happening?” It drills down into the historical data with interactive reports and diagnoses the root cause. Interactive reports are still built on top of a data warehouse, but additional data may be added to support this type of analysis. A broader view of your data estate allows for more root causes to be found.

- Predictive analytics learns from historical trends and patterns to make predictions for the future. It deals with answering the question, “What will happen in the future?” This is where machine learning (ML) and artificial intelligence (AI) come into play, drawing data from the data warehouse or raw data sources to learn from.

- Prescriptive analytics answers the question, “What should I do?” and prescribes the next best action. When we know what will happen in the future, we can act on it. This can be done by using different ML methods such as recommendation systems or explainable AI. Recommendation systems recommend the next best product to customers based on similar products or what similar customers bought. Think, for instance, about Netflix recommending new series or movies you might like. Explainable AI will identify which factors were most important to output a certain prediction, which allows you to act on those factors to change the predicted outcome.
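
The four questions can be illustrated on a toy dataset. The following is a minimal sketch, not a production approach: the sales records are made up, a naive linear extrapolation stands in for a real ML model, and a simple rule stands in for a recommendation system. All names are hypothetical.

```python
from collections import defaultdict

# Hypothetical monthly sales records: (month, region, revenue).
sales = [
    (1, "north", 100), (1, "south", 80),
    (2, "north", 110), (2, "south", 60),
    (3, "north", 120), (3, "south", 40),
]

# Descriptive: what is happening? Total revenue per month.
revenue_per_month = defaultdict(int)
for month, _region, revenue in sales:
    revenue_per_month[month] += revenue

# Diagnostic: why is it happening? Drill down into the change per region.
def region_change(region):
    """Revenue change between the first and last month for one region."""
    rows = sorted((m, r) for m, reg, r in sales if reg == region)
    return rows[-1][1] - rows[0][1]

change_by_region = {region: region_change(region) for region in ("north", "south")}

# Predictive: what will happen? Naive linear extrapolation of the monthly
# total (a stand-in for a trained ML model).
months = sorted(revenue_per_month)
totals = [revenue_per_month[m] for m in months]
average_step = (totals[-1] - totals[0]) / (len(totals) - 1)
forecast_next_month = totals[-1] + average_step

# Prescriptive: what should I do? A simple rule (a stand-in for a
# recommendation system) that targets the weakest region.
declining_region = min(change_by_region, key=change_by_region.get)
if forecast_next_month < totals[-1]:
    action = f"investigate and support the {declining_region} region"
else:
    action = "no action needed"

print(dict(revenue_per_month))  # {1: 180, 2: 170, 3: 160}
print(change_by_region)         # {'north': 20, 'south': -40}
print(forecast_next_month)      # 150.0
print(action)                   # investigate and support the south region
```

Each step builds on the previous one: the forecast relies on the aggregated totals, and the suggested action relies on both the forecast and the regional drill-down.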

The following diagram shows the value-extracting process, going from data to analytics, decisions, and actions:

Figure 1.1 – Extracting value from data

Just as with humans, ML models need to learn from their mistakes, which can be done with the help of a feedback loop. A feedback loop allows a teacher, typically a human reviewer, to correct the outcomes of the ML model and add the corrections as training labels for the next learning cycle. Learning cycles allow the ML model to improve over time and combat data drift. Data drift occurs when the data on which the model was trained is no longer representative of the data for which the model makes predictions. This leads to inaccurate predictions.
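
One simple way to spot data drift is to compare a feature's distribution in the training data with its distribution in recent live data. The sketch below uses a basic mean-shift check, which is only one of many possible approaches; the function names, the sample values, and the threshold of 2.0 are assumptions chosen for illustration.

```python
from statistics import mean, stdev

def drift_score(training_values, live_values):
    """Return how many training standard deviations the live mean has shifted."""
    baseline_std = stdev(training_values)
    if baseline_std == 0:
        raise ValueError("training values have no variation to compare against")
    return abs(mean(live_values) - mean(training_values)) / baseline_std

def has_drifted(training_values, live_values, threshold=2.0):
    """Flag drift when the shift exceeds the (assumed) threshold."""
    return drift_score(training_values, live_values) > threshold

# Hypothetical customer ages seen during training and in production.
training_ages = [30, 32, 34, 36, 38]
live_ages_similar = [31, 33, 35, 37]
live_ages_shifted = [50, 52, 54, 56]

print(has_drifted(training_ages, live_ages_similar))  # False
print(has_drifted(training_ages, live_ages_shifted))  # True
```

When a check like this flags drift, the corrected labels collected through the feedback loop can be used to retrain the model on more representative data.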

As ML models improve over time, it is best practice to have human confirmation of predictions before automating the decision-making process. Even when an ML model has matured, we can’t rely on the model being right 100 percent of the time. This is why ML models often work with confidence scores, stating how confident they are in the prediction. If the confidence score is below a certain threshold, human intervention is required.
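
The threshold logic can be sketched as a small routing function. This is an illustrative example only: the function name, the return values, and the default threshold of 0.9 are assumptions, and a suitable threshold depends on the use case.

```python
def route_prediction(label, confidence, threshold=0.9):
    """Automate confident predictions; send the rest to a human reviewer."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence < threshold:
        return ("human_review", label)
    return ("automated", label)

print(route_prediction("approve", 0.97))  # ('automated', 'approve')
print(route_prediction("approve", 0.62))  # ('human_review', 'approve')
```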

To get continuous value out of data, it is necessary to build a data roadmap and strategy. A complexity-value matrix is a mapping tool that helps prioritize which data projects need to be addressed first. This matrix is described in more detail in the following section.

A complexity-value matrix

A complexity-value matrix has four quadrants to plot future data projects on. These go from high- to low-value and low- to high-complexity. Projects that are considered high-value and have a low complexity are called “quick wins” or “low-hanging fruit” and should be prioritized first. These are often Software-as-a-Service (SaaS) applications or third-party APIs that can quickly be integrated into your data platform to get immediate value. Data projects with high complexity and low value should not be pursued as they have a low Return on Investment (ROI). In general, the more difficult our analytical questions become, the more complex the projects may be, but also, the more value we may get out of them.
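
The quadrant assignment can be sketched in a few lines of Python. This is a minimal example under stated assumptions: projects are scored from 1 to 10 on both axes, the midpoint of 5 separates low from high, and the project names are invented. Only the “quick win” and “do not pursue” labels come from the description above; the other two labels are placeholders.

```python
def classify_project(value, complexity, midpoint=5):
    """Place a project, scored 1-10 on both axes, in one of four quadrants."""
    high_value = value > midpoint
    high_complexity = complexity > midpoint
    if high_value and not high_complexity:
        return "quick win"
    if high_value and high_complexity:
        return "strategic project"  # placeholder label (assumption)
    if high_complexity:
        return "do not pursue"
    return "low priority"  # placeholder label (assumption)

# Hypothetical projects with (value, complexity) scores.
projects = {
    "third-party sentiment API": (8, 2),
    "custom recommendation engine": (9, 9),
    "legacy report migration": (2, 8),
    "dashboard color refresh": (2, 2),
}

for name, (value, complexity) in projects.items():
    print(f"{name}: {classify_project(value, complexity)}")
```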

A visualization of the four quadrants of the matrix can be seen as follows:

Figure 1.2 – The four quadrants of a complexity-value matrix

Often, we think only of the direct value that data projects bring, but we should also consider their indirect value. Data engineering projects often do not have a direct value as they move data from one system to another, but this may indirectly open up a world of new opportunities.

To extract value from data, a solid data architecture needs to be in place. In the following section, we’ll define an abstract data architecture diagram that will be referenced throughout this book to explain data architecture principles.
