Learning Apache Spark 2

As mentioned before, you can use Spark with Python, Scala, Java, and R. Several executable shell scripts are available in the spark/bin directory, and so far we have only looked at the Spark shell, which can be used to explore data using Scala. The following executables are available in the spark/bin directory, and we'll use most of them during the course of this book:

- beeline
- pyspark
- run-example
- spark-class
- sparkR
- spark-shell
- spark-sql
- spark-submit

Whatever shell you use, based on your past experience or aptitude, you have to deal with one abstraction that is your handle to the data available on the Spark cluster, be it local or spread over thousands of machines. This abstraction is called the Resilient Distributed Dataset (RDD), and it is the fundamental unit of your data and computation in Spark. As the name indicates, RDDs have, among others, two key features: they are resilient, meaning that lost data can be recomputed, and they are distributed, meaning that the data is spread across the nodes of the cluster.

Chapter 2, Transformations and Actions with Spark RDDs, will walk through the intricacies of RDDs, and we will also discuss other higher-level APIs built on top of RDDs, such as DataFrames and machine learning pipelines.

Let's quickly demonstrate how you can explore a file on your local file system using Spark. Earlier, in Figure 1.2, when we were exploring the contents of the spark folder, we saw a file called README.md, which contains an overview of Spark, a link to the online documentation, and other assets available to developers and analysts. We are going to read that file and convert it into an RDD.

To enter the Scala shell, run the following command:

./bin/spark-shell

Using the Scala shell, run the following code:

val textFile = sc.textFile("README.md") // Create an RDD called textFile

At the prompt, you immediately get a confirmation of the type of the variable created:

Figure 1.4: Creating a simple RDD

If you want to see the operations available on the RDD, type the variable name followed by a period at the shell prompt (textFile. in this case), and press the Tab key. You'll see the following list of operations available:

Figure 1.5: Operations on String RDDs

Since our objective is to do some basic exploratory analysis, we will look at some of the basic actions on this RDD.

RDDs can have actions or transformations called upon them, but the result of each is different. A transformation creates a new RDD, while an action causes the RDD to be evaluated and returns the resulting values to the client.
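The difference between transformations and actions described above can be sketched in plain Python. This is only an analogy: `ToyRDD` and `contains_apache` are hypothetical names invented for this illustration, the sample lines are made up, and none of this is Spark's API. The sketch records each call to the filter predicate to show that a transformation does no work until an action asks for a result.

```python
from itertools import islice

# Plain-Python analogy for lazy transformations and eager actions.
# ToyRDD is a hypothetical teaching class, not part of Spark's API.
class ToyRDD:
    def __init__(self, source):
        # source is a zero-argument callable that returns a fresh iterator
        self._source = source

    @classmethod
    def from_list(cls, items):
        return cls(lambda: iter(items))

    def filter(self, predicate):
        # Transformation: builds a new ToyRDD and evaluates nothing yet
        return ToyRDD(lambda: (x for x in self._source() if predicate(x)))

    def count(self):
        # Action: walks the whole pipeline and returns a value
        return sum(1 for _ in self._source())

    def take(self, n):
        # Action: pulls only the first n elements through the pipeline
        return list(islice(self._source(), n))


calls = []  # records every line the predicate actually inspects

def contains_apache(line):
    calls.append(line)
    return "Apache" in line

# Made-up sample lines standing in for the contents of a text file
lines = ToyRDD.from_list(["# Apache Spark", "Spark is fast", "Apache License"])

with_apache = lines.filter(contains_apache)  # transformation: nothing runs yet
calls_before_action = len(calls)

apache_count = with_apache.count()           # action: triggers the evaluation
calls_after_action = len(calls)
```

In this sketch the predicate has not been called at all after `filter`, and it is called once per line only when `count` runs. Spark's real RDDs add distribution and fault tolerance on top of this idea, which the toy class does not attempt to model.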

Let's look at the first seven lines from this RDD:

textFile.take(7) // Returns the first 7 lines from the file as an Array of Strings

The result of this looks something like the following:

Figure 1.6: First seven lines from the file

Alternatively, let's look at the total number of lines in the file, using another action from the list of actions available on a string RDD. Please note that each line from the file is considered a separate item in the RDD:

textFile.count() // Returns the total number of items

Figure 1.7: Counting RDD elements
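To make the "one line, one item" point concrete, here is a minimal plain-Python sketch that mimics what `take` and `count` report for a text file. The helper functions `take` and `count` below are hypothetical stand-ins written for this illustration, not Spark calls, and the file contents are made up. Note that a blank line still counts as an item.

```python
import os
import tempfile
from itertools import islice

def take(path, n):
    # Return the first n lines of the file, without trailing newlines
    with open(path, encoding="utf-8") as handle:
        return [line.rstrip("\n") for line in islice(handle, n)]

def count(path):
    # Return the number of lines, treating every line as one item
    with open(path, encoding="utf-8") as handle:
        return sum(1 for _ in handle)

# A small stand-in file; the contents are made up for illustration
sample = "# Title\n\nFirst paragraph\nSecond paragraph\n"
with tempfile.NamedTemporaryFile(
    "w", suffix=".md", delete=False, encoding="utf-8"
) as tmp:
    tmp.write(sample)
    sample_path = tmp.name

first_two = take(sample_path, 2)   # the heading and the blank line
total = count(sample_path)         # four lines, so four items
os.remove(sample_path)
```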

We've looked at some actions, so now let's try to look at some transformations available as a part of string RDD operations. As mentioned earlier, transformations are operations that return another RDD as a result.

Let's filter the data file and find the lines that contain the keyword Apache:

val linesWithApache = textFile.filter(line => line.contains("Apache"))

This transformation will return another string RDD.

You can also chain multiple transformations and actions together. For example, the following will filter the text file on the lines that contain the word Apache, and then return the number of such lines in the resultant RDD:

textFile.filter(line => line.contains("Apache")).count()

Figure 1.8: Transformations and actions

You can monitor the jobs that are running on this cluster from the Spark UI, which by default listens on port 4040.

If you navigate your browser to http://localhost:4040, you should see the following Spark driver program UI:

Figure 1.9: Spark driver program UI

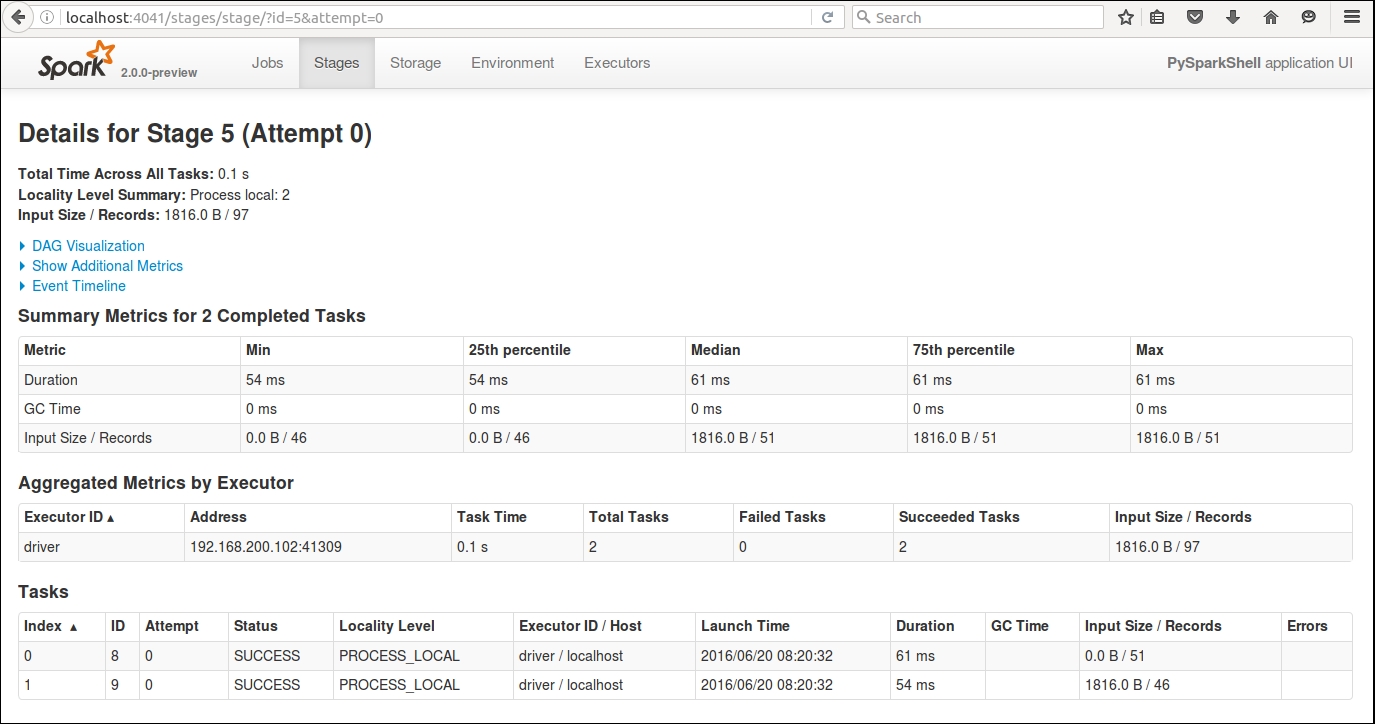

Depending on how many jobs you have run, you will see a list of jobs based on their status. The UI gives you an overview of the type of job, its submission date/time, the amount of time it took, and the number of stages that it had to pass through. If you want to look at the details of the job, simply click the description of the job, which will take you to another web page that details all the completed stages. You might want to look at individual stages of the job. If you click through the individual stage, you can get detailed metrics about your job.

Figure 1.10: Summary metrics for the job

We'll go through DAG Visualization, Event Timeline, and other aspects of the UI in a lot more detail in later chapters, but the objective of showing this to you was to highlight how you can monitor your jobs during and after execution.

Before we go any further, let's replay the same examples from the Python shell, for those of you who are more comfortable with Python than with Scala.

To enter the Python shell, run the following command:

./bin/pyspark

You'll see an output similar to the following:

Figure 1.11: Spark Python shell

If you look closely at the output, you will see that the framework tried to start the Spark UI at port 4040, but was unable to do so. It has instead started the UI at port 4041. Can you guess why? The reason is that port 4040 is already occupied, and Spark keeps trying successive ports after 4040 until it finds one available to bind the UI to.
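The port fallback described above can be sketched with Python's standard `socket` module. This is an illustration of the general "try the next port" technique, not Spark's actual implementation. `first_free_port` is a hypothetical helper, and its default of 16 retries is chosen to mirror the default of Spark's `spark.port.maxRetries` setting.

```python
import socket

def first_free_port(start=4040, max_retries=16, host="127.0.0.1"):
    """Return the first bindable port in start..start+max_retries, else None."""
    last = min(start + max_retries, 65535)
    for port in range(start, last + 1):
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
            try:
                probe.bind((host, port))
            except OSError:
                continue  # port is taken, so try the next one
            return port
    return None

# Simulate a first shell holding a port, then ask where a second one would land.
blocker = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
blocker.bind(("127.0.0.1", 0))   # port 0 lets the OS pick any free port
blocker.listen(1)
taken_port = blocker.getsockname()[1]

next_port = first_free_port(start=taken_port)  # skips the occupied port
blocker.close()
```

In the situation from the text, the first shell plays the role of `blocker` on port 4040, so the second shell ends up on the next port that can be bound, which was 4041.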

Let's do some basic data manipulation using Python at the Python shell. Once again we will read the README.md file:

textFile = sc.textFile("README.md") # Create an RDD called textFile by reading the contents of the README.md file

Let's read the first seven lines from the file:

textFile.take(7)

Let's look at the total number of lines in the file:

textFile.count()

You'll see output similar to the following:

Figure 1.12: Exploratory data analysis with Python

As we demonstrated with the Scala shell, we can also filter data using Python by applying transformations, and we can chain transformations with actions.

Use the following code to apply a transformation to the dataset. Remember, a transformation results in another RDD. Here, the transformation filters the input dataset and keeps the lines that contain the word Apache:

linesWithApache = textFile.filter(lambda line: "Apache" in line) # Find lines with Apache

Once we have obtained the filtered RDD, we can apply an action to it:

linesWithApache.count() # Count the number of items

Let's chain the transformation and action together:

textFile.filter(lambda line: "Apache" in line).count() # Chain transformation and action together

Figure 1.13: Chaining transformations and actions in Python

If you are unfamiliar with lambda functions in Python, please don't worry about it at this moment. The objective of this demonstration is to show you how easy it is to explore data with Spark. We'll cover this in much more detail in later chapters.

If you look at the driver program UI, you will find that the summary metrics are very similar to what we saw when the code was run from the Scala shell.

Figure 1.14: Spark UI demonstrating summary metrics

We have now gone through some basic Spark programs, so it is worth understanding a bit more about the Spark architecture. In the next section, we will dig deeper into the Spark architecture before moving on to the next chapter, where we will have many more code examples explaining the various concepts around RDDs.
