So far, we have looked at the operators that connect Apex pipelines to the outside world: they read data from messaging systems, files, and other sources, and write results to various destinations. We have seen that the Apex library offers comprehensive support for integrating external systems through feature-rich connectors.

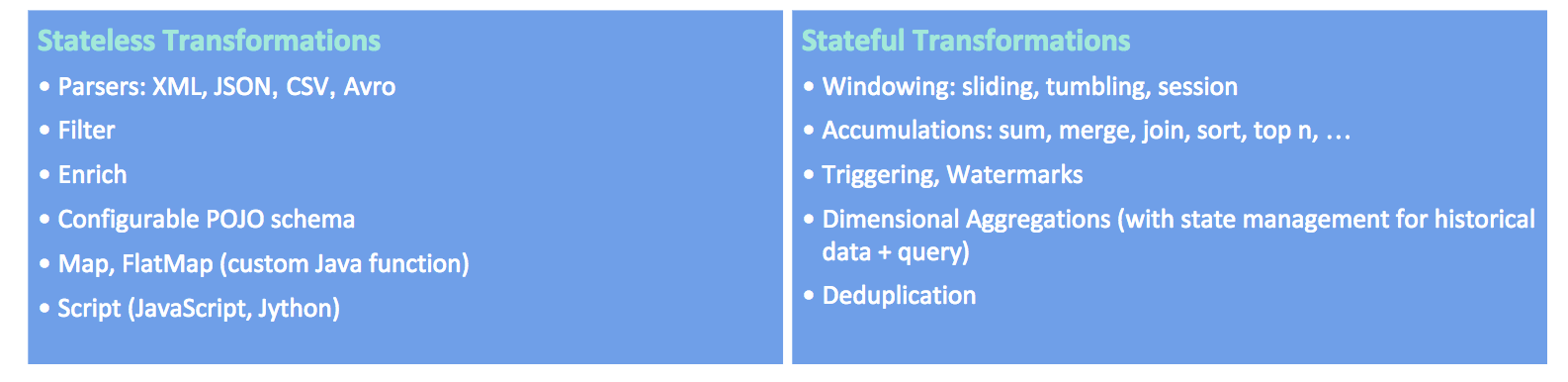

Now it is time to look at the support available for the actual functionality of the pipeline. These building blocks are transformations: their purpose is to modify or accumulate the tuples that flow through the processing pipeline. Examples of typical transformations are parsing, filtering, aggregation by key, and join.
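To make these kinds of transformation concrete, the following is a minimal sketch in plain Java that chains parsing, filtering, and aggregation by key over a small in-memory list. It deliberately does not use the Apex operator API: the class `TransformSketch`, the `Event` type, the `key,value` line format, and the "keep positive values" rule are all hypothetical names and assumptions chosen only for illustration.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.TreeMap;
import java.util.stream.Collectors;

public class TransformSketch {

    // Hypothetical tuple type: a key and an integer value.
    static final class Event {
        final String key;
        final int value;

        Event(String key, int value) {
            this.key = key;
            this.value = value;
        }
    }

    // Parsing: turn a raw "key,value" line into an Event, or empty if malformed.
    static Optional<Event> parse(String line) {
        String[] parts = line.split(",");
        if (parts.length != 2) {
            return Optional.empty();
        }
        try {
            return Optional.of(new Event(parts[0].trim(), Integer.parseInt(parts[1].trim())));
        } catch (NumberFormatException e) {
            return Optional.empty();
        }
    }

    // Filtering: a per-tuple decision (assumed rule: keep positive values only).
    static boolean keep(Event e) {
        return e.value > 0;
    }

    // Parse and filter each line independently, then sum the values per key.
    static Map<String, Integer> run(List<String> lines) {
        return lines.stream()
                .map(TransformSketch::parse)
                .flatMap(Optional::stream)
                .filter(TransformSketch::keep)
                .collect(Collectors.groupingBy(
                        e -> e.key,
                        TreeMap::new,
                        Collectors.summingInt(e -> e.value)));
    }

    public static void main(String[] args) {
        // "bad" fails to parse and "c,-5" is filtered out.
        System.out.println(run(List.of("a,1", "b,2", "a,3", "bad", "c,-5")));
        // prints {a=4, b=2}
    }
}
```

In this sketch, `parse` and `keep` look at one tuple at a time and remember nothing between tuples, whereas the final aggregation has to accumulate a running sum per key. In a streaming pipeline the input is unbounded, so such an accumulation would be scoped to a window rather than to the whole input as it is here.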

The preceding diagram categorizes transformations into those that are applied to individual tuples and those that aggregate tuples based on keys and windows. Often, per-tuple transforms are stateless and windowed transforms...